Creating and Maintaining a Shared Knowledge Source

This project requires me to use a variety of tools that all need a “common basis of knowledge”. I asked Microsoft Copilot for what I should do to have a single source of truth for the many different tools. (As will often be the case on this project, I’m including my “discussions” with copilots about what we can do. Part of my journey that I want to share is how I’m using the tools.)

My prompt was:

Alright, question. I’m building a lot of “foundation” knowledge that I want to use for my projects. I’m using GitHub Copilot, Copilot Notebooks, Copilot Studio, and soon Azure AI Foundry. Do you know of any way I can build a single “reference source” that all 4 of those can use as foundational knowledge?

Great question, Jeremy. You’re working with a powerful mix of tools—GitHub Copilot, Copilot Notebooks, Copilot Studio, and soon Azure AI Studio (Foundry)—and it makes total sense to want a unified “foundation” that all of them can draw from.

Here’s a breakdown of what’s possible and where things stand today:

✅ What You Can Do Today

1. Centralize Knowledge in a GitHub Repo

- GitHub Copilot and Copilot Notebooks can both reference code, markdown, and structured data in your repo.

- You can create a knowledge/ folder with:

- .md files for conceptual documentation

- .json or .yaml for structured data

- .ipynb notebooks for examples and workflows

This becomes your “source of truth” for foundational knowledge.

2. Use Azure AI Studio (Foundry) to Ingest That Repo

- Azure AI Studio allows you to bring your own data (BYOD) from:

- GitHub

- Azure Blob Storage

- SharePoint

- Websites

- You can ingest your GitHub repo or markdown files as a custom grounding source for your copilots.

3. Copilot Studio (Power Platform)

- You can upload files or connect to Dataverse, SharePoint, or Azure to ground your copilots.

- If your foundational knowledge is in a GitHub repo or Azure Blob, you can connect it via custom connectors or Azure OpenAI.

🔄 How to Make It Work Across All 4

To unify your foundation across all tools:

| Tool | How to Connect to Shared Knowledge |
|---|---|
| GitHub Copilot | Reads from repo context |
| Copilot Notebooks | Reads from repo + notebook cells |
| Copilot Studio | Upload files or connect to Azure/SharePoint |
| Azure AI Studio | Ingest GitHub, Blob, SharePoint, etc. |

So the best strategy is to:

- Build your foundational knowledge in a GitHub repo (markdown, JSON, YAML, notebooks).

- Ingest that repo into Azure AI Studio as a grounding source.

- Connect Copilot Studio to the same source via Azure or SharePoint.

🧠 Bonus: Add Metadata for Better Retrieval

Use frontmatter or structured headers in your markdown files like:

---

title: "Designing a smart extension dependency structure"

tags: ["solutionarchitecture", "monolith", "businesscentral", "dependencies"]

summary: "Using SOLID principles to consider application design."

---

This improves semantic search and grounding accuracy across tools.
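To make the frontmatter idea concrete, here is a minimal sketch of how a script could read that metadata back out of a markdown file. It is not something Copilot produced; the function name `parse_frontmatter` is made up for illustration, and it only handles flat `key: value` lines (a real pipeline would more likely use a proper YAML parser).

```python
import json


def parse_frontmatter(text):
    """Split a markdown document into (metadata dict, body text).

    Only flat `key: value` lines between two `---` markers are handled.
    Values that happen to be valid JSON (quoted strings, lists) are decoded;
    anything else is kept as a plain string.
    """
    lines = text.splitlines()
    if not lines or lines[0].strip() != "---":
        return {}, text
    meta = {}
    for i, line in enumerate(lines[1:], start=1):
        if line.strip() == "---":
            return meta, "\n".join(lines[i + 1:])
        if ":" not in line:
            continue
        key, _, raw = line.partition(":")
        raw = raw.strip()
        try:
            meta[key.strip()] = json.loads(raw)
        except json.JSONDecodeError:
            meta[key.strip()] = raw
    return {}, text  # no closing marker found: treat as plain markdown


SAMPLE = """---
title: "Designing a smart extension dependency structure"
tags: ["solutionarchitecture", "monolith", "businesscentral", "dependencies"]
summary: "Using SOLID principles to consider application design."
---
Body text goes here.
"""

meta, body = parse_frontmatter(SAMPLE)
print(meta["title"])
print(meta["tags"])
```

The tags come back as a normal Python list, so they could be fed into whatever tagging or search tooling ends up consuming the repo.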

This was very useful, and a clear plan. Almost all of these I have a pretty clear understanding of how to do already, but this process is all about documenting the ideas. It sounded like what I needed, so now let’s have some “Step by Step”, since we’ll later use this for our knowledge repo’s “contributing” documentation too.

Can you resummarize the knowledge base creation, including recommended folder pathing and file types (with notation of purposes)? I also want a 2nd section outlining how I’ll need to keep all the sources updated, covering not only entering the new knowledge (ingestion) but also what steps I’ll need to do to make use of the new data (for example, GH Copilot, I’d just have to pull request) for each tooling.

This results in the response of:

Absolutely, Jeremy. Here’s a clear, structured guide to help you build and maintain your multi-domain knowledge base—tailored for your use across GitHub Copilot, Copilot Notebooks, Copilot Studio, and Azure AI Foundry.

🧱 Part 1: Knowledge Base Creation

📁 Recommended Folder Structure

Organize your GitHub repo like this:

/knowledge/

├── business-central/

│ ├── bc-capabilities.md # Functional breakdowns from the BC Capability Guide

│ ├── bc-roles.md # Role/responsibility mappings (VAR/ISV)

│ ├── bc-pipelines.md # DevOps, testing, and deployment practices

│ └── bc-lifecycle.md # Lifecycle, telemetry, and support strategies

├── worldbuilding/

│ ├── heroes.md # Hero backstories and business needs

│ ├── cities.md # Worldbuilding: cities, cultures, exports

│ └── aethernet-assembly.md # Wizard personas aligned to tech domains

├── industry-practices/

│ ├── partner-evolution.md # From "way behind" to best practices

│ ├── consulting-vs-product.md # VAR vs ISV models

│ └── org-roles.md # Functional org charts and responsibilities

├── teaching-framework/

│ ├── goals.md # Overall teaching goals and structure

│ ├── learning-paths.md # Tool-by-tool learning journeys

│ └── tool-alignment.md # Mapping tools to teaching content

├── shared-assets/

│ ├── glossary.yaml # Shared terms and definitions

│ ├── tags.json # Tagging system for semantic search

│ └── metadata.yaml # File-level metadata for ingestion

📄 Recommended File Types & Purposes

| File Type | Purpose |
|---|---|
| .md | Primary content: explanations, walkthroughs, lore, logic |
| .ipynb | Interactive examples, prompt engineering, future Azure AI workflows |
| .json | Structured data for tags, relationships, or semantic metadata |
| .yaml | Configurations, glossary, ingestion metadata |
| .pptx | Visual teaching aids (optional, for ingestion or reference) |
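Since this will feed the "contributing" docs later, a small check like the following could flag files that don't match the table. This is only a sketch under my own assumptions: the `classify` helper and the stray `.docx` example are hypothetical, and the purpose strings just paraphrase the table.

```python
from collections import Counter
from pathlib import PurePosixPath

# Extension-to-purpose map, paraphrasing the table above.
PURPOSES = {
    ".md": "primary content",
    ".ipynb": "interactive examples",
    ".json": "structured data and tags",
    ".yaml": "configuration, glossary, ingestion metadata",
    ".pptx": "visual teaching aids",
}


def classify(paths):
    """Return (count per known extension, list of paths with other extensions)."""
    counts = Counter()
    unknown = []
    for p in paths:
        ext = PurePosixPath(p).suffix.lower()
        if ext in PURPOSES:
            counts[ext] += 1
        else:
            unknown.append(p)
    return counts, unknown


example = [
    "knowledge/business-central/bc-roles.md",
    "knowledge/shared-assets/glossary.yaml",
    "knowledge/shared-assets/tags.json",
    "knowledge/worldbuilding/heroes.md",
    "knowledge/notes.docx",  # hypothetical stray file, not in the suggested layout
]
counts, unknown = classify(example)
print(dict(counts))
print("Unrecognized:", unknown)
```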

Use frontmatter in .md files for semantic enrichment:

---

title: "BC Roles and Responsibilities"

tags: ["business-central", "roles", "VAR", "ISV"]

summary: "Mapping of responsibilities across VAR and ISV models."

updated: 2025-07-19

---

🔁 Part 2: Keeping It Updated

Here’s how to manage updates across your tools:

🧩 1. GitHub Copilot

- Trigger: Commit or pull request to the repo

- Action: Copilot automatically uses the latest repo context

- What You Do:

- Add/edit .md, .ipynb, .json, etc.

- Commit and push

- Optionally, tag releases for major updates

📓 2. Copilot Notebooks

- Trigger: Open or reference updated files in the notebook

- Action: Notebooks use the latest content from the repo

- What You Do:

- Reference updated files in notebook cells

- Optionally, embed .md or .json content directly in cells

🧠 3. Azure AI Foundry

- Trigger: New data ingested into Azure Blob or Azure AI Search

- Action: Foundry uses updated embeddings for grounding

- What You Do:

- Upload updated files to Azure Blob (manually or via GitHub Action)

- Ensure AI Search indexer is scheduled or event-triggered

- Rebuild embeddings if using vector search

💬 4. Copilot Studio

- Trigger: Azure AI Search index refresh

- Action: Copilot Studio bots use updated knowledge

- What You Do:

- Ensure your AI Search index is connected to Copilot Studio

- Upload new files to Blob or update index source

- Optionally, reconfigure topics to use new tags or metadata

🛠️ Optional Automation Enhancements

| Task | Tool |
|---|---|
| Auto-upload to Azure Blob | GitHub Actions |
| Auto-index in Azure AI Search | Indexer with schedule or blob event trigger |
| Metadata tagging | YAML frontmatter or .json tag maps |
| Embedding refresh | Azure Cognitive Search skillset or Foundry pipeline |

Again, this is all super clear – I know how to handle lots of these steps. The only major downside I have is that Copilot Notebooks, while very powerful, only really “ground” their knowledge in the attached files or pages, so I’ll have to “re-ingest” the files into Notebooks regularly. I hope they’ll eventually be able to at least reference the Azure AI Search tooling, giving a hook to the wider ecosystem.

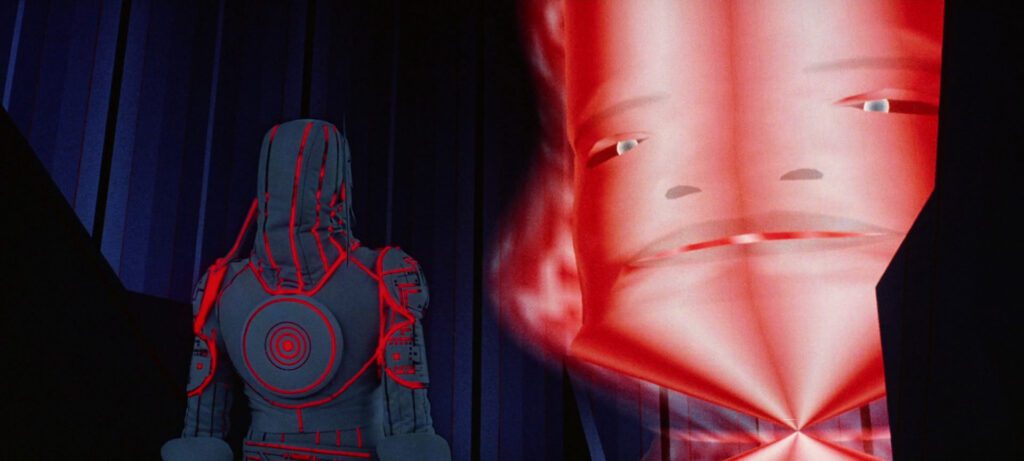

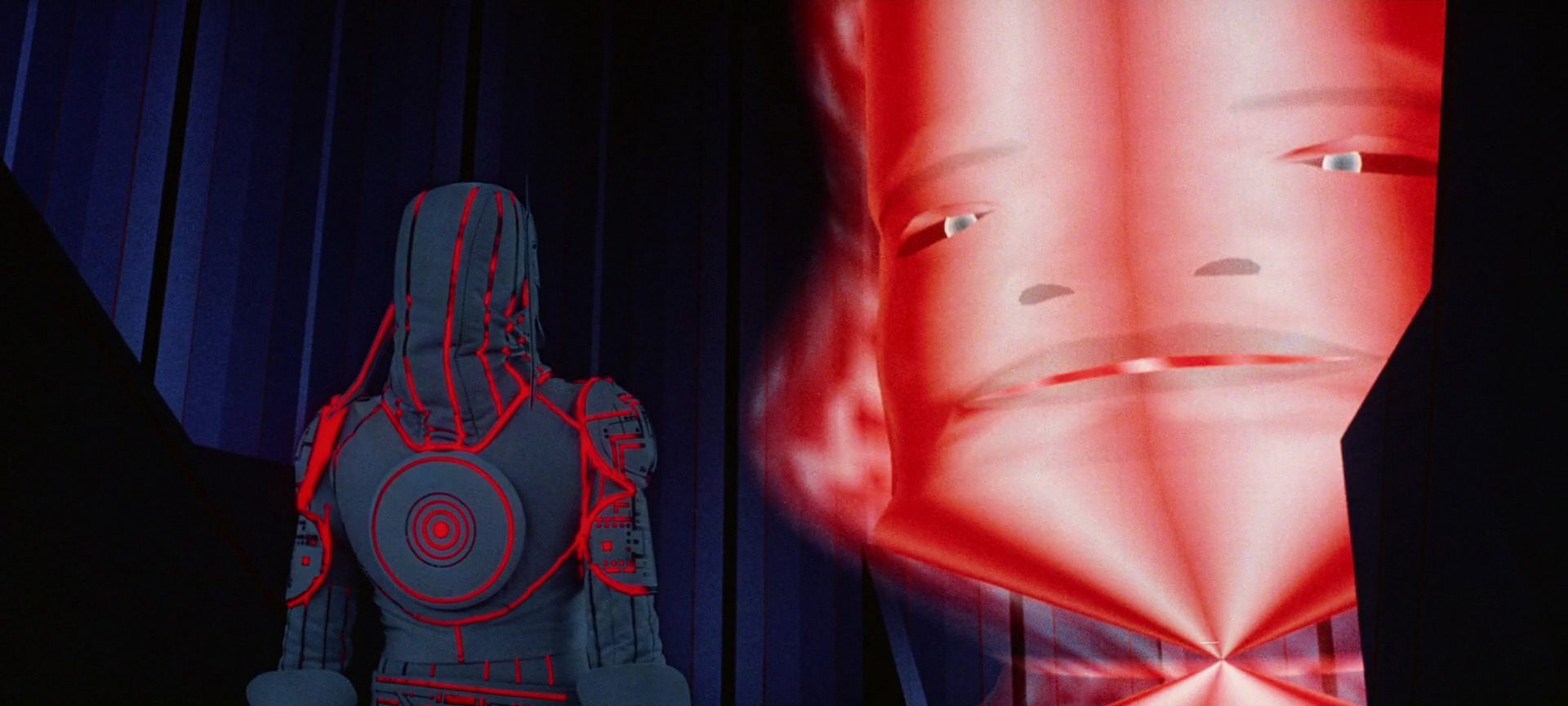

However, check this out. Let’s think about the Power of the MCP. And no, I don’t mean this guy:

I have often said on social media that “GitHub Copilot in VSCode is like having a Junior Developer”. Having MCPs installed is like having your Junior Dev take a class on a topic before they come to work for you (note: you gotta run in Agent mode!). So, for example, I’m not writing these posts in WordPress. I write them in Markdown on my local machine, and my VSCode has the WordPress MCP installed. I can ask Copilot to just push the content up as a Post. It’ll create the Categories and Tags, convert the formatting from Markdown to WordPress blocks, etc.

There’s a HUGE number of MCPs, and the list is always growing. We’ll do a deeper dive on them in the future.

But, our relevant info here is that there are MCPs for GitHub, Azure, and more. So, with the above information, we actually now have enough information that VSCode can take over. I could do all the above steps manually.

So, I installed the GitHub MCP tools along with many Azure tools. We’ll need to take each part step-by-step, though, as there’s a 128 tool limit as of this writing.

Setting up the GitHub Knowledge Repo

Switching to GitHub Copilot in VSCode, I could use everything above in this post as the education (plus my existing “briefing” document about the project). 😄

Reading the attached post, we need a new “Knowledge” repository in the GitHub Organization for “Nubimancy”. We’ll want to clone that to “./Knowledge”. Then we’ll want to create a README.md file in the repo to explain the purpose. After that, please review all the existing information in our “Knowledge” (INSTRUCTIONS.md) as well as the ./wordpress/pages/* for any extra info. Break all these pieces into separate md or ipynb files in the folder structure suggested.

It’s not super practical to share the “output” of VSCode’s chat here. But, it did:

- Analyzed the Knowledge I had

- Reviewed all the ‘pages’ data

- Created the Knowledge repo on Github

- Cloned the repo locally

- Updated the README to be relevant

- Created the CONTRIBUTING file

- Created (locally) the various folders we’ll want

- It then went through and created a TON of content in all of them

- It created and pushed the commit, which I have let it do to show all the work it did from my descriptions in the existing knowledge files. Check it out
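For comparison, the folder-creation step from that list could be done by hand with a few lines of Python. This is a sketch of my own, not what Copilot ran: the `scaffold` function is hypothetical, the file names are copied from the folder structure suggested earlier, and it assumes the `knowledge/` folder sits at the root of the cloned repo.

```python
from pathlib import Path

# Folder layout copied from the structure Copilot suggested earlier in the post.
LAYOUT = {
    "business-central": ["bc-capabilities.md", "bc-roles.md", "bc-pipelines.md", "bc-lifecycle.md"],
    "worldbuilding": ["heroes.md", "cities.md", "aethernet-assembly.md"],
    "industry-practices": ["partner-evolution.md", "consulting-vs-product.md", "org-roles.md"],
    "teaching-framework": ["goals.md", "learning-paths.md", "tool-alignment.md"],
    "shared-assets": ["glossary.yaml", "tags.json", "metadata.yaml"],
}


def scaffold(root):
    """Create missing folders and empty placeholder files; never overwrite."""
    created = []
    for folder, files in LAYOUT.items():
        target = Path(root) / "knowledge" / folder
        target.mkdir(parents=True, exist_ok=True)
        for name in files:
            path = target / name
            if not path.exists():
                path.touch()
                created.append(path)
    return created


if __name__ == "__main__":
    # Assumes the repo was cloned to ./Knowledge as requested in the prompt.
    for path in scaffold("./Knowledge"):
        print("created", path)
```

Running it twice is safe: existing files are left alone, so the second run creates nothing.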

This did take some time, and I cannot even imagine how many tokens I burned. 😆

And Now to Azure!

Let’s see how far we can go with MCPs. For this, I installed some Azure MCP server components, because there’s a bunch of things we’ll need to set up.

Of course, we’ll need a Resource Group for these new AI search related things. It sounds like, given the above, we’ll need Azure Blob Storage to throw the ‘data’ to, and then we’ll want some Azure AI Search setup. But, since I’m unsure how all this works, we’ll ask these things to go step by step. Per the guidance above, let’s ask it this way:

Now, using MCP tools, I need you to:

– Create a new Azure Resource Group for “Nubimancy-Ai-Blogging”

– We need a new Azure Blob Storage for “ProjectKnowledge”

– We need a GitHub action in our ./Knowledge repo to push all the knowledge files into the Azure Blob Storage (using good GitHub organization key/secret best practices)

The Copilot first created the Azure Resource Group, and it pretty accurately detected that I would want it in Sweden Central (based on other Azure resources I already had).

It then tried to create the Blob Storage, but ran into an error with the Subscription ID. So, it ran az account set --subscription [id] to set the default for the following commands. It then tried again with the az CLI, which still failed. At that point, I interrupted in frustration.

You keep using az commands – please try the MCP tools over any powershell or command line tools first.

It then switched to trying MCP commands, but reported the Azure Storage MCP tools only support listing storage.

Note: Important learning moment for me. I want to always use MCP tools whenever possible. From now on, I need the Copilot to think that way, and so much so, I’d actually rather it check my requests for MCP use and if the tools don’t support that action, then tell me before we start. A “pre-flight” review. Knowing what I know, this goes into my Instructions file.

There’s a guide for creating “custom instructions”, but in short: in the Command Palette, run “Chat: New Instructions File”. Decide whether it’s a per-project (folder/workspace) instruction or, if it’s an important enough rule for yourself, make it per-user.

I’m adding this to my instructions for this project, relying so heavily on MCP tools:

# Common Rules to Follow

– MCP Preference and Checking: When given instructions that mention using MCP, always check the complete prompt to see what MCP tools are available that will help with that request. Provide a ‘review summary’ (part by part, if ✅ MCP Supported or ❌ MCP Not Supported) as the first part of the response. If any parts are not supported, then ask for further clarification and offer what other options may work.
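The logic I’m asking the Copilot to follow with that rule is simple enough to sketch in code. This is purely illustrative (the Copilot does this through the instructions file, not through a script): the `preflight` function and the step descriptions are hypothetical, and the single tool name is the storage-listing tool that shows up in the response further down.

```python
from typing import Dict, Optional, Set


def preflight(steps: Dict[str, Optional[str]], available_tools: Set[str]) -> str:
    """Build a part-by-part MCP support summary.

    `steps` maps a human-readable step to the MCP tool it would need,
    or None when no known tool covers it.
    """
    lines = []
    for step, tool in steps.items():
        if tool is not None and tool in available_tools:
            lines.append(f"✅ MCP Supported: {step} (via {tool})")
        else:
            lines.append(f"❌ MCP Not Supported: {step}")
    return "\n".join(lines)


available = {"mcp_azure_storage_storage_account_list"}
requested = {
    "Create a new storage account": None,
    "List existing storage accounts": "mcp_azure_storage_storage_account_list",
}
report = preflight(requested, available)
print(report)
```

The point is the ordering: the review comes first, before any work starts, so unsupported parts get surfaced up front.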

To test this behavior, I’m trying this as my prompt:

Now, using MCP tools, I need you to create a new Azure Blob Storage for “ProjectKnowledge”

This gives me a much better response, which I’ll share here in total because it’s perfect:

MCP Support Review Summary:

– ❌ MCP Not Supported: Creating Azure Blob Storage accounts is not directly supported by available MCP tools

– ✅ MCP Supported: Listing existing storage accounts via mcp_azure_storage_storage_account_list

– ✅ MCP Supported: Creating and managing blob containers once a storage account exists via mcp_azure_storage_storage_blob_container_* tools

I see you want to create a new Azure Blob Storage for “ProjectKnowledge” using MCP tools. Unfortunately, the current Azure Storage MCP tools don’t support creating new storage accounts – they only allow listing existing accounts, and working with containers within those accounts.

Let me show you what we can do with the available MCP tools: The response indicates success but doesn’t show any accounts, suggesting either there are no storage accounts or the response formatting didn’t include them.

Alternative Options:

1. Azure Portal: Create the storage account manually through the Azure Portal

2. Azure CLI: Use the az storage account create command

3. Azure PowerShell: Use the New-AzStorageAccount cmdlet

4. ARM Template: Deploy a storage account using an ARM template

Would you like me to:

1. Help you create the storage account using Azure CLI or PowerShell instead?

2. Prepare a step-by-step guide for creating it through the Azure Portal?

3. Focus on the other parts of your workflow that are MCP-supported (like setting up containers once the storage account exists)?

Since we’re hitting a limitation of the MCP (common in July of 2025; we’re in the early adoption era), I have a choice: do I push the Copilot toward PowerShell/CLI commands, or take it on myself? This is why we hit that weird-seeming dead end above.

Let’s use the az storage account create command, but make sure to include the “--subscription” parameter to avoid earlier errors.

Still errors. This, as it turns out, is an issue with Azure. My “Subscription” didn’t have “Resource Providers” configured. I’ll have to dive into THAT mess another day, but adding “Microsoft.Storage” as a “Registered” resource provider made the Azure command go through just fine. I told it simply: “try again, I’ve updated the settings on the Azure portal to allow it.”
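For anyone who hits the same wall, here is roughly what the two CLI calls look like, written as a sketch that only builds and prints the commands rather than running them. The helper names are hypothetical, the subscription ID is a placeholder, and the `Standard_LRS` SKU is my assumption (the post doesn’t record which SKU was used). I registered the provider through the portal; `az provider register` is the CLI equivalent as I understand it.

```python
import shlex


def provider_register_cmd(namespace, subscription):
    """Command to register a resource provider on a subscription."""
    return ["az", "provider", "register",
            "--namespace", namespace,
            "--subscription", subscription]


def storage_account_create_cmd(name, resource_group, location, subscription,
                               sku="Standard_LRS"):
    """Command to create a storage account, always passing --subscription."""
    return ["az", "storage", "account", "create",
            "--name", name,
            "--resource-group", resource_group,
            "--location", location,
            "--sku", sku,  # assumption: the SKU is not stated in the post
            "--subscription", subscription]


SUBSCRIPTION = "<subscription-id>"  # placeholder, fill in your own

commands = [
    provider_register_cmd("Microsoft.Storage", SUBSCRIPTION),
    storage_account_create_cmd("nubimancyknowledge", "Nubimancy-Ai-Blogging",
                               "swedencentral", SUBSCRIPTION),
]
for cmd in commands:
    print(shlex.join(cmd))
```

To actually execute them, each list could be passed to `subprocess.run(cmd, check=True)` once the placeholder is replaced.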

This time, Storage Account creation was fine, so it then created the Storage Container we’d need.

Based on this blog post (part of the joy of writing what’s happening and the goals in VSCode), it then jumped right into creating the GitHub Action for the sync.

It then created a Service Principal in Azure and generated the secret info we needed, giving me the full contents as JSON for the AZURE_CREDENTIALS secret in my repo Secrets. (Along with instructions to complete in GitHub to add it.)
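Before pasting a secret like that into GitHub, a quick sanity check on the JSON can save a failed workflow run. This is a sketch under an assumption: the four key names below are what I understand the Azure login action to expect, so verify them against the action’s documentation. The function name and the sample values are placeholders.

```python
import json

# Assumption: key names expected in the AZURE_CREDENTIALS JSON.
REQUIRED_KEYS = ("clientId", "clientSecret", "subscriptionId", "tenantId")


def missing_credential_keys(secret_json):
    """Return the required keys that are absent or empty in the secret JSON."""
    data = json.loads(secret_json)
    if not isinstance(data, dict):
        return list(REQUIRED_KEYS)
    return [key for key in REQUIRED_KEYS if not data.get(key)]


sample = json.dumps({
    "clientId": "<client-id>",
    "clientSecret": "<client-secret>",
    "subscriptionId": "<subscription-id>",
    "tenantId": "",  # deliberately left empty to show the check firing
})
print("Missing:", missing_credential_keys(sample))
```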

Once I did that, I could click the Continue it offered to push the GitHub action commit. Commit

It looks good:

- On a push to main, if it’s a file type we care about, it’ll update the changed files

- On a manual call, it’ll push all the files (full sync) of the types we care about
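The two behaviors boil down to one decision, which can be sketched like this. It is not the workflow Copilot generated (that lives in the repo); the `files_to_upload` function is hypothetical, and the extension list is my guess at “the types we care about”. The event names `push` and `workflow_dispatch` are the standard GitHub Actions names for a push and a manual run.

```python
from pathlib import PurePosixPath

# Assumption: which file types the sync cares about.
SYNC_EXTENSIONS = {".md", ".ipynb", ".json", ".yaml", ".yml"}


def files_to_upload(event, changed, all_files):
    """Pick files to sync: everything on a manual run, only changes on a push."""
    candidates = all_files if event == "workflow_dispatch" else changed
    return sorted(
        p for p in candidates
        if PurePosixPath(p).suffix.lower() in SYNC_EXTENSIONS
    )


ALL_FILES = [
    "knowledge/shared-assets/glossary.yaml",
    "knowledge/worldbuilding/heroes.md",
    "knowledge/worldbuilding/cities.md",
    "LICENSE",
]
CHANGED = ["knowledge/worldbuilding/heroes.md", "LICENSE"]

print(files_to_upload("push", CHANGED, ALL_FILES))
print(files_to_upload("workflow_dispatch", CHANGED, ALL_FILES))
```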

I manually triggered the action, and sure enough, in my Azure Blob Storage, I have the nubimancyknowledge Storage Account, with a blob container of projectknowledge. Inside of that was effectively just a copy of the github repo, which is just fine for our purposes.

Since I’m doing this over multiple days, here’s a fun helper section for you (it’s a long post) and me:

Based on this post’s goals and description of steps taken, please note which setup steps are done ✅ and not yet done 🚧 in a list at the end of this post.

Setup Progress Summary

Based on the goals and steps described in this post, here’s a summary of what has been completed and what remains to be done:

Completed Steps ✅

- ✅ Created a GitHub repository for centralized knowledge

- ✅ Set up recommended folder structure in the Knowledge repo

- ✅ Created initial content files organized by topic areas

- ✅ Created README.md and CONTRIBUTING.md files

- ✅ Created Azure Resource Group for “Nubimancy-Ai-Blogging”

- ✅ Created Azure Blob Storage for “ProjectKnowledge”

- ✅ Set up GitHub Actions workflow to automatically sync Knowledge repo to Azure Blob Storage

- ✅ Registered Microsoft.Storage resource provider in Azure

- ✅ Created Service Principal and GitHub secrets for secure Azure access

- ✅ Successfully tested the GitHub Action for syncing content

Pending Steps 🚧

- 🚧 Set up Azure AI Search to index the content in the Blob Storage

- 🚧 Configure Azure AI Search skillset or embeddings generation for vector search

- 🚧 Connect Azure AI Search to Copilot Studio

- 🚧 Set up Copilot Studio to use knowledge base for grounding

- 🚧 Connect knowledge base to Azure AI Foundry

- 🚧 Create sample Copilot Notebooks that reference the knowledge base

- 🚧 Implement semantic enrichment with frontmatter and metadata

- 🚧 Set up automated indexing triggers for Azure AI Search when Blob Storage is updated

The most critical next step would be setting up Azure AI Search to index the content now stored in the blob container, followed by connecting that search index to the various Copilot tools (Studio, Foundry) to enable knowledge grounding across platforms.

Works for all of us, I bet!

Please review the Azure Search MCP tools you have access to and see if you have access to the tools needed to set up and configure what we need to do next. If so, just confirm what you’ll do. If not, let’s have a small guide on what I should do myself in the Azure Portal, please. (Output in chat, not the post.)

The bad news is that the Azure Search MCP is still mostly read-only for now. But since I asked, the guide on what to set up next was written out nicely for me, which is good, since I’m unfamiliar with AI Search:

Azure AI Search Setup Guide

1. Create an Azure AI Search Service

- Log in to the Azure Portal

- Navigate to your “Nubimancy-Ai-Blogging” resource group

- Click “+ Create” and search for “Azure AI Search”

- Configure the service:

- Name: “nubimancyknowledge-search” (or similar)

- Location: Same as your blob storage (Sweden Central)

- Pricing tier: Start with “Basic” for testing, can upgrade later (or “Standard” if you need vector search capabilities)

2. Connect to Blob Storage and Create an Indexer

- Open your new Azure AI Search service

- Go to “Import data”

- Select “Azure Blob Storage” as your data source

- Connect to your “nubimancyknowledge” storage account and “projectknowledge” container

- Configure data source settings:

- Data source name: “knowledge-blob-datasource”

- Connection string: Use the connection string for your storage account

- Container: “projectknowledge”

- Blob folder path: Leave blank to index all folders

- Blob type: “Content and metadata”

3. Configure Index Settings

- Name your index (e.g., “knowledge-index”)

- Configure key fields:

- Set “metadata_storage_path” as the key (typically the default)

- Select fields to index like content, metadata, etc.

- Set appropriate retrievable, filterable, sortable, and searchable properties

- For Markdown files, ensure text extraction is properly configured

4. Configure Enrichment (Skillsets)

- Add cognitive skills as needed:

- Text extraction (for all document types)

- OCR (if needed for images or PDFs)

- Key phrase extraction

- Entity recognition

- Language detection

5. Create the Indexer

- Name your indexer (e.g., “knowledge-indexer”)

- Configure the schedule (e.g., once daily)

- Advanced options:

- Configure field mappings if needed

- Set up change detection policy (recommended)

- Configure error handling

6. Optional: Set Up Vector Search

If using a Standard tier or higher:

- Create an Azure OpenAI service in the same region

- Configure vector fields in your index

- Set up embedding models and vector configuration
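To help me picture what the portal wizard is building, here is a sketch of the three resources from the guide (data source, index, indexer) as JSON payloads. The names come from the guide above; the property names follow my understanding of the Azure AI Search REST API and should be checked against the current documentation before use. The function name, the extra `metadata_storage_name` field, and the connection string placeholder are my own.

```python
import json


def build_search_resources(container="projectknowledge",
                           connection_string="<storage-connection-string>"):
    """Return draft definitions for the data source, index, and indexer."""
    datasource = {
        "name": "knowledge-blob-datasource",
        "type": "azureblob",
        "credentials": {"connectionString": connection_string},
        "container": {"name": container},
    }
    index = {
        "name": "knowledge-index",
        "fields": [
            {"name": "metadata_storage_path", "type": "Edm.String",
             "key": True, "searchable": False},
            {"name": "metadata_storage_name", "type": "Edm.String",
             "searchable": True, "filterable": True},
            {"name": "content", "type": "Edm.String", "searchable": True},
        ],
    }
    indexer = {
        "name": "knowledge-indexer",
        "dataSourceName": datasource["name"],
        "targetIndexName": index["name"],
        "schedule": {"interval": "P1D"},  # once daily, as the guide suggests
        "parameters": {"configuration": {"dataToExtract": "contentAndMetadata"}},
        "fieldMappings": [
            # Blob paths contain characters that are not valid in keys,
            # so the path is base64-encoded before it becomes the document key.
            {"sourceFieldName": "metadata_storage_path",
             "targetFieldName": "metadata_storage_path",
             "mappingFunction": {"name": "base64Encode"}},
        ],
    }
    return {"datasource": datasource, "index": index, "indexer": indexer}


resources = build_search_resources()
print(json.dumps(resources["indexer"], indent=2))
```

Nothing here talks to Azure; it only shows how the indexer ties the data source and the index together by name.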

But, this post is starting to get pretty long. Let’s wrap up here and continue later. Especially after I do some research and ask around about Azure AI Search pricing, as it appears to be a little steep.
